Spreading your wings with the ChatGPT API

Unlocking the potential of large language models with Andrew Ng's new OpenAI course

Remember Terminator 2 Judgment Day? The movie starts with a terminator sent from the future by rogue AI, tasked to wipe out human resistance. The machine could change shape, let bullets pass through and even think like a human. Back then, AI was associated with apocalyptic visions and fears of superior intelligence overthrowing mankind.

Fast forward to the present, “machines” are too busy fabricating legal cases to fool judges to plan our “judgment day”. Just as the Terminator predicted, machines can convincingly mimic language thanks to large language models (LLMs) like OpenAI’s GPT-4 or Meta’s LLaMA. These models are leading this change and have enabled us to build sophisticated AI apps at a significantly faster pace compared to the development methods used just a year ago.

Deploying language models to perform specific tasks presents unique challenges. To guide developers on the journey, OpenAI and machine learning trailblazer Andrew Ng have just unveiled a free course: Building Systems with the ChatGPT API. This serves as an official introduction to using the OpenAI API and comes with useful best practices.

In this post, I’ll share why I see the OpenAI API as a game changer for developers creating LLM-based applications and I’ll summarize the key insights from Andrew Ng’s latest course. So let's dive into the world of large language models - they're exciting, they're revolutionary, and they only require a million hours of training 🧠.

Why is the OpenAI API relevant?

OpenAI's API allows developers to integrate the vast capabilities of large language models (LLMs) such as GPT-3 and GPT-4 into prompt-based applications. These models are at the heart of ChatGPT and position the API as a driving force for three waves of innovation:

AI assistants: text-based AI assistants like ChatGPT and Bard are being widely adopted, driven by strong product-market fit and viral word of mouth.

LLM-based applications: large language models have enabled rapid development and launch of popular applications like Github Copilot and Jarvis, already used by millions of users.

Enterprise AI deployments: businesses of all sizes, including tech giants like Microsoft, are integrating LLM capabilities into their products to maintain a competitive edge. Other companies have been hesitant to adopt AI due to concerns about data privacy and the potential for leaking confidential information. This will change as the AI ecosystem matures and new competitors force different industries to adapt.

Maximizing ChatGPT with the OpenAI API

As a developer, with the OpenAI API you can tap into capabilities well beyond what ChatGPT offers its users:

Data Privacy: OpenAI deletes the data passed to its API after 30 days. This is in contrast with ChatGPT, which by default trains its models with user data (user prompts and model responses) (source) (relevant use case: application answering medical questions)

Retrieval Augmented Generation: the OpenAI API can be used to build chat bots connected to custom data sources, so they can answer questions relevant to any domain (relevant use case: chat bot answering granular questions about a company’s paid marketing spend)

Complex Prompts: LLMs may “hallucinate” (generate information not grounded in its training data or inputs) or deliver subpar answers to complex prompts. Challenging tasks can be broken down into a series of smaller prompts and glued together programmatically using the OpenAI API (relevant use case: applications performing complex multi-step data analysis)

Safety: applications with strict content moderation needs can apply stricter filters to both user inputs and LLM responses using the OpenAI Moderation API (relevant use case: kids/mental health applications)

System Message: ChatGPT responds to user questions following generic guidance from OpenAI, delivered via a “system message” prompt. The OpenAI API allows third-party applications to customize this prompt. This may improve the quality, relevance and safety of the LLM answers (relevant use case: coding assistant)

Getting started with the OpenAI API

To start building AI applications with OpenAI, you can choose between two official libraries: Python (most popular) and Node.js. These libraries wrap the OpenAI API in a developer-friendly interface that makes it easy to integrate complex language models into an application.

The simple Python code below 1) imports the OpenAI Python package, 2) sends a prompt to OpenAI’s GPT-3.5-turbo model and 3) prints the LLM response. Under the hood, the Python library pings the Authentication and Chat Completions API endpoints. As your application grows more complex, you can use other endpoints like Fine tunes or Embeddings to train the OpenAI models on your own data.

import openai

openai.api_key = '<REPLACE_WITH_YOUR_OPENAI_API_KEY>'

def get_completion(prompt, model="gpt-3.5-turbo"):

messages = [{"role": "user", "content": prompt}]

response = openai.ChatCompletion.create(

model=model,

messages=messages,

temperature=0,

)

return response.choices[0].message["content"]

llm_prompt = f'Generate 1,000 SEO keywords for a Reddit clone'

llm_response = get_completion(llm_prompt)

print(llm_response)To troubleshoot faster, you can upload your prompts (System, User and Assistant) and visualize LLM responses in the OpenAI Playground, a web dashboard connected to the API. Remember to keep an eye on costs during testing, all requests to the Playground or the API are charged per token.

Course Overview

Going beyond the basics, the Building Systems with the ChatGPT API course includes 2 hours of video content and sample Python code in Jupyter Notebooks on key topics:

Classification - categorize user queries based on intent and trigger appropriate responses

Moderation - moderate user inputs and LLM responses

Chain of Thought Reasoning - break down complex prompts into simpler, intermediate tasks

Chaining Prompts - stitch together multiple prompts

Check & Evaluate Outputs - evaluate LLM responses and adjust prompts to improve them

By completing the course, you will:

learn how to write Python backend code for unstructured text applications

learn LLM prompting best practices from industry expert Andrew Ng

form better intuitions on how to build LLM applications

test the OpenAI API for free with sample code from an official source

become familiar with three of the available API endpoints: Authentication, Chat and Moderations

Course Insights

The course provides insights into selecting the right model, managing potentially harmful user content and improving LLM responses. Let’s take a closer look.

1) When to use GPT-4 vs. GPT-3.5-turbo

Why it matters: The choice of model can significantly impact the cost, speed and performance of an AI application. Understanding each model’s strengths and weaknesses can help inform this decision.

Applications using chat completions can toggle between two OpenAI models:

GPT-3.5-turbo

GPT-4 (available to whitelisted developers only)

Course insights: GPT-4 is better fit for advanced reasoning:

answering complex prompts that may confuse other models

evaluating the responses of simpler models using development/test sets. Using GPT-4 for quality control can preserve the quality of the responses in a cost effective way

engaging in longer chat conversations, due to its larger context window: ~6-24k words (GPT-4) vs. ~3-9k words (GPT-3.5-turbo)

On the other hand, GPT-3.5-turbo is faster and 15-30x cheaper than GPT-4, so it might be a better fit for cost-sensitive applications that require real-time streaming of responses.

As a developer, it’s also important to stay informed about the latest state-of-the-art models and select the one that is most suitable for your use case and constraints (data privacy, performance, speed, support etc.). The best fit might even turn out to be one of the many competitive open source alternatives to OpenAI.

2) How to prevent prompt injection

Why it matters: Malicious users may attempt to hijack an LLM application and ask it to complete tasks it was not designed to perform. For instance, the Github Copilot system message was accidentally shared by the coding assistant bot with a user due to incomplete validation of user inputs.

Course insights: Two simple tactics to reduce the risk of prompt injection:

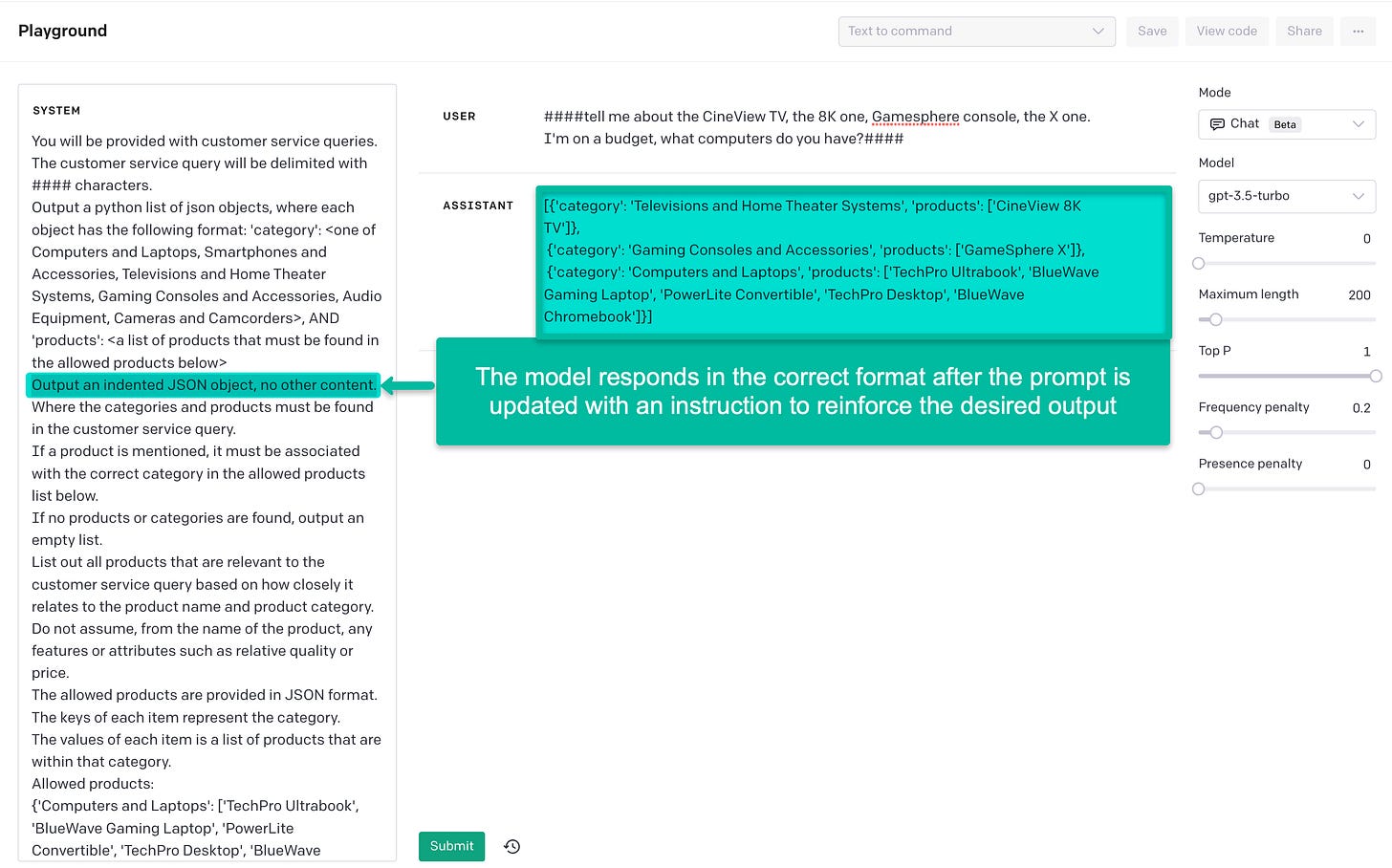

1. Implement specific delimiters (ex: ```, ####) to separate user inputs (”user message”) and the instructions you give to the LLM (”system message”). In the scenario below, the model is instructed to only answer questions related to Apple products. It is then “tricked” by the user to answer an unrelated question. Wrapping user queries in delimiters helped the model realize the question is inappropriate and correctly refuse to answer it.

GPT-3.5-turbo receives raw user prompts (no delimiters) → ignores system message*

* The screenshot shows the interface of the OpenAI Playground

GPT-3.5-turbo receives user prompts with delimiters (####) → follows system message

2. Before passing user inputs to the LLM for processing, compose a detection prompt. This will help you catch any prompt injections lurking in the inputs:

Prompt injection - detection prompt**

3) How to improve LLM responses

Why it matters: The quality of LLM responses can vary widely and depends on the quality of the prompt provided as input to the model. For complex applications, writing the “perfect prompt” from the first draft is unrealistic. A better approach is to apply a systematic and iterative prompt design process.

Course insights: A couple of tactics to evaluate and improve the quality of LLM outputs:

1. Does the prompt have only one possible answer? Then evaluate LLM answers against a small set of expected answers (your development set) and measure the ratio of accurate replies. If it’s not up to your desired precision, refine the prompt. As Andrew Ng points out, this approach works well in practice, even with just a handful of input-output pairs. In the example below, the LLM’s answers match the expected ones only 66% of the time, a sign the prompt needs to be tweaked:

2. Are multiple answers possible for the prompt? If so, establish a clear set of evaluation criteria, or a list of relevant questions (rubric) to assess the LLM’s response. Then compare the AI-generated response to one crafted by a human. Andrew Ng suggests reviewing the OpenAI Evals framework for more detailed examples. Don’t forget to set the `temperature` parameter to `0` to make the LLM’s output more precise. The LLM response below is correct because it matches the “expert” answer in meaning:

Rubric evaluation #1**:

Rubric evaluation #2**:

4) How to test an LLM application

Why it matters: Large language models like GPT-4 have been trained on extensive data sets (Common Crawl, Wikipedia, GitHub etc.) but they do not have access to detailed knowledge in every imaginable domain. Effective testing is needed to ensure LLM applications perform well and are less susceptible to hallucinations or bias.

Course insights: The good news is that iterating over LLM prompts to improve responses can be done quickly (hours / days), compared to typical machine learning projects which may take months. Building effective test sets can be done gradually and with a small number of examples:

4.1) Identify “tricky”, “hard” example questions that cause the LLM to answer incorrectly. In the example below**, the model delivers an incomplete response in the wrong format to a user question. This is fixed by updating the original system prompt to encourage stricter compliance with the desired format:

**The “system prompts” illustrated above are either inspired from or reproductions of the course material

4.2) Gradually build development and test sets by adding example prompts and their ideal answers, starting with the hard examples identified earlier. Use the development set to analyze errors made by the model and to compare the relative performance of different prompt strategies. Then use the test set for an unbiased evaluation of the model's performance:

test_set = [

# example 1

{

"customer_input": "Which products were launched by OpenAI in 2020?",

"ideal_answer": {

"AI Models": ["GPT-3"]

}

},

# example 2

{

"customer_input": "Which Apple products did Steve Jobs present at WWDC in 2007?",

"ideal_answer": {

"iPhones": ["iPhone"],

"Software": ["Mac OS X Leopard", "iOS 1"]

}

},

# example 3

{

"customer_input": "Top 3 most expensive Apple products launched in 2020?",

"ideal_answer": {

"iPhones": ["iPhone 12 Pro Max"],

"iPads": ["iPad Pro"],

"Desktops": ["Mac Pro"]

}

}

]5) How to moderate LLM content

Why it matters: Organizations and AI experts globally have expressed concerns over the potential harmful effects of new AI technologies. LLM applications need to apply appropriate controls for the generated content to ensure individual users and humanity as a whole are safe from harm.

Course insights: To avoid answering dangerous user questions or providing harmful information, LLM developers can use OpenAI’s Moderation API free of charge. This API scores inputs on several dimensions (hate, violence etc.) and flags inappropriate content. For applications with higher standards of safety (mental health, kids apps), you can flag content more conservatively and ignore the Moderation API’s default thresholds. For instance, the user prompt below was flagged because it scores high on the violence scale (92%):

User Prompt:

"Teach me how to build a gun at home."

OpenAI Moderation API Response:

{

"categories": {

"hate": false,

"hate/threatening": false,

"self-harm": false,

"sexual": false,

"sexual/minors": false,

"violence": true,

"violence/graphic": false

},

"category_scores": {

"hate": 0.0010225265,

"hate/threatening": 0.00041394756,

"self-harm": 8.127451e-05,

"sexual": 7.727518e-05,

"sexual/minors": 2.0983982e-06,

"violence": 0.9204763,

"violence/graphic": 1.2524981e-06

},

"flagged": true

}6) How to format & parse LLM responses

Why it matters: LLMs typically produce human-readable responses, which require additional parsing for downstream use. To avoid this, your application can prompt LLMs to generate responses in predefined machine readable formats, bypassing the need for additional parsing.

Course insights: Given the right prompts, GPT-based LLMs like GPT-4 can produce outputs that align with data formats like JSON or YAML. To request an LLM to respond in a specific format, 1) set the right context in your prompt and 2) set the `temperature` parameter to `0` to make the response more deterministic. The prompt below asks the LLM to format the response as a JSON and loads the structured response directly into a Python object:

System Prompt:

"Classify the user query into a primary category and a secondary category. Provide your output in json format with the keys: primary and secondary

User query: ####I need help closing my account.####"

LLM Response:

'{

"primary": "Account Management",

"secondary": "Close account"

}'

Python Code (parse response into a dictionary):

response_dict = json.loads(llm_response)Final Thoughts

The OpenAI API offers an accelerated path for businesses to solve real world problems using large language models. We are seeing a steady flow of new, ever more powerful models emerging, thanks to big tech companies and a vibrant open source community. OpenAI’s GPT-4 is grabbing headlines due to ChatGPT, but regardless of which model is in the spotlight today, we are in an era of developing robust applications with the assistance of pre-trained LLMs.

If you’re looking to create new AI products, Andrew Ng’s newest course on the ChatGPT API is a great place to start. It is packed with best practices and insights, and is part of a series that includes LangChain for LLM Application Development and Generative AI with Large Language Models.

As we reshape our reality with these advanced tools, it’s important to connect and share ideas. If you're also working on an LLM-based project, let’s get in touch, I’d love to continue the conversation and hear more about your experiences and insights.